Hi! I am a third-year PhD student in the CLIP Lab at the University of Maryland, advised by Mohit Iyyer. My research focuses on natural language processing, specifically the intersection of evaluation and alignment in long-context scenarios. I began my PhD at UMass NLP and later transferred to UMD along with my advisor. Before my PhD, I worked as a research engineer at Hyundai Motor Group and LG Electronics. I was selected as an AI specialist and conducted research at CMU LTI as a visiting scientist, mentored by Jaime Carbonell.


Yapei Chang, Yekyung Kim, Michael Krumdick, Amir Zadeh, Chuan Li, Chris Tanner, Mohit Iyyer. NeurIPS 2025. Code


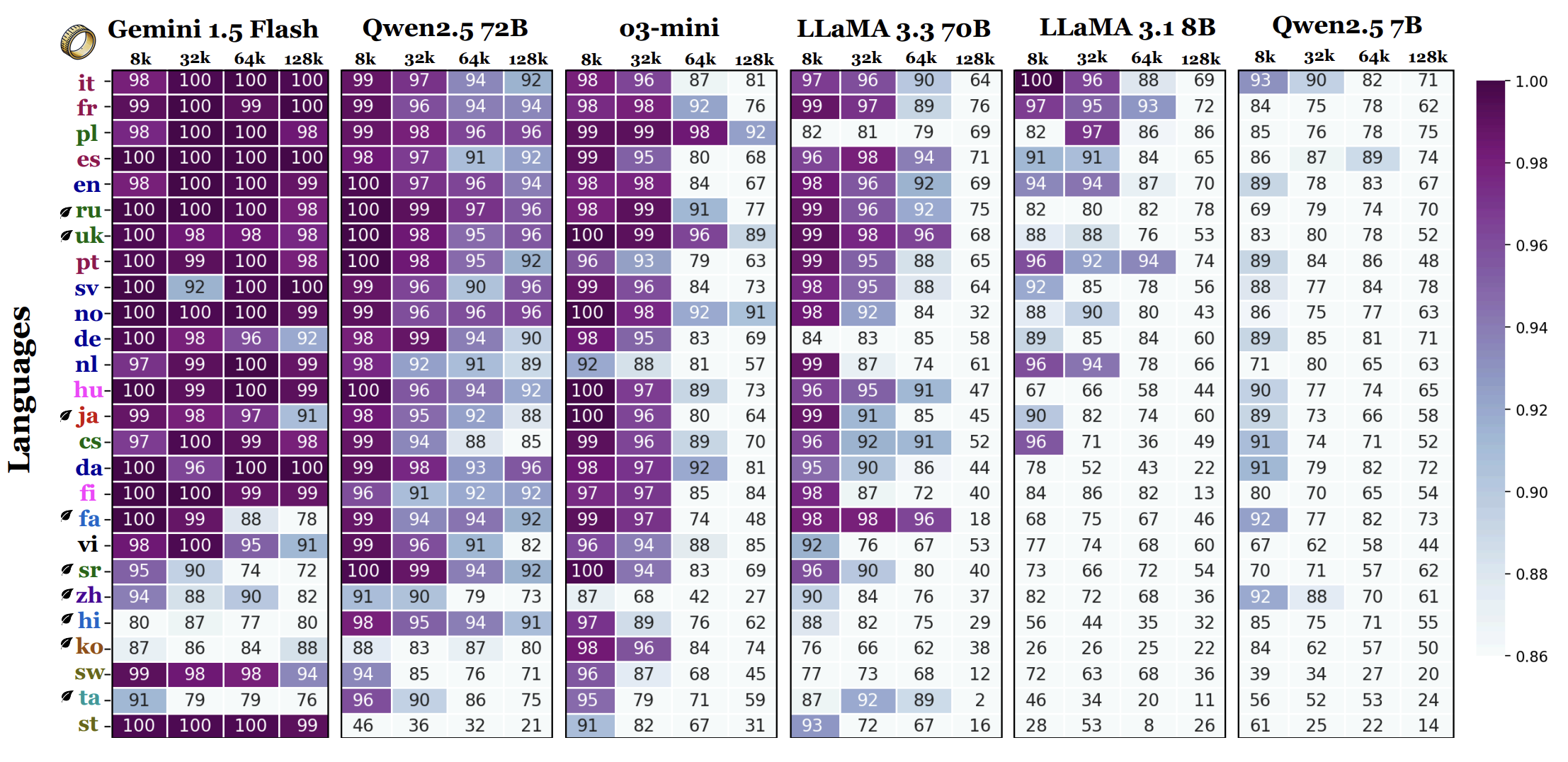

Yekyung Kim, Jenna Russell, Marzena Karpinska, Mohit Iyyer. COLM 2025. Code


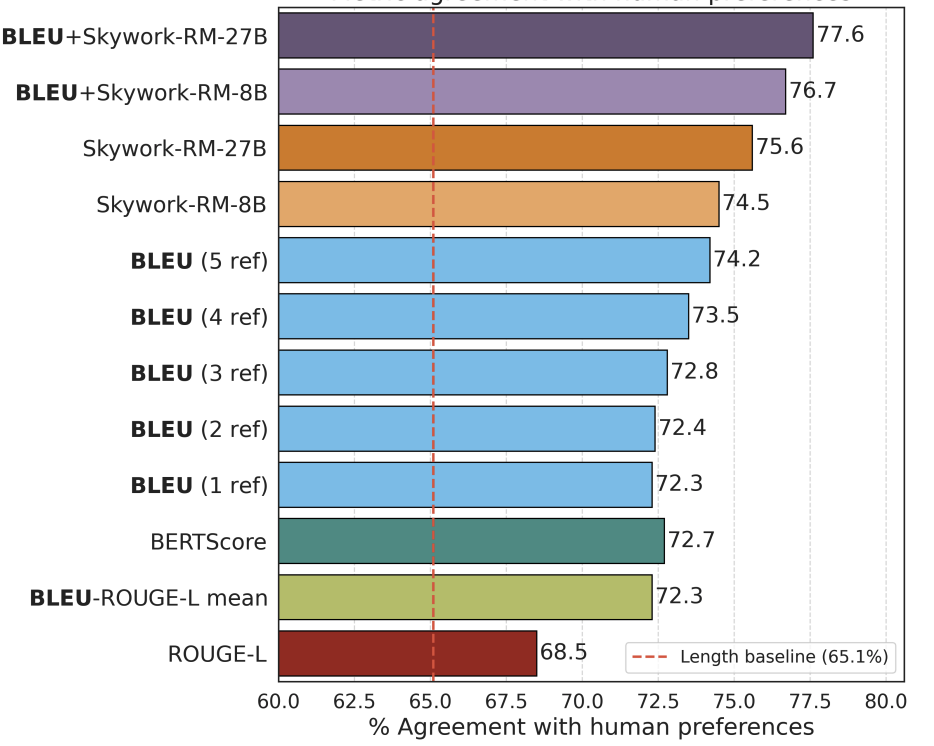

Yixiao Song, Yekyung Kim, Mohit Iyyer. EMNLP Findings 2024. Code


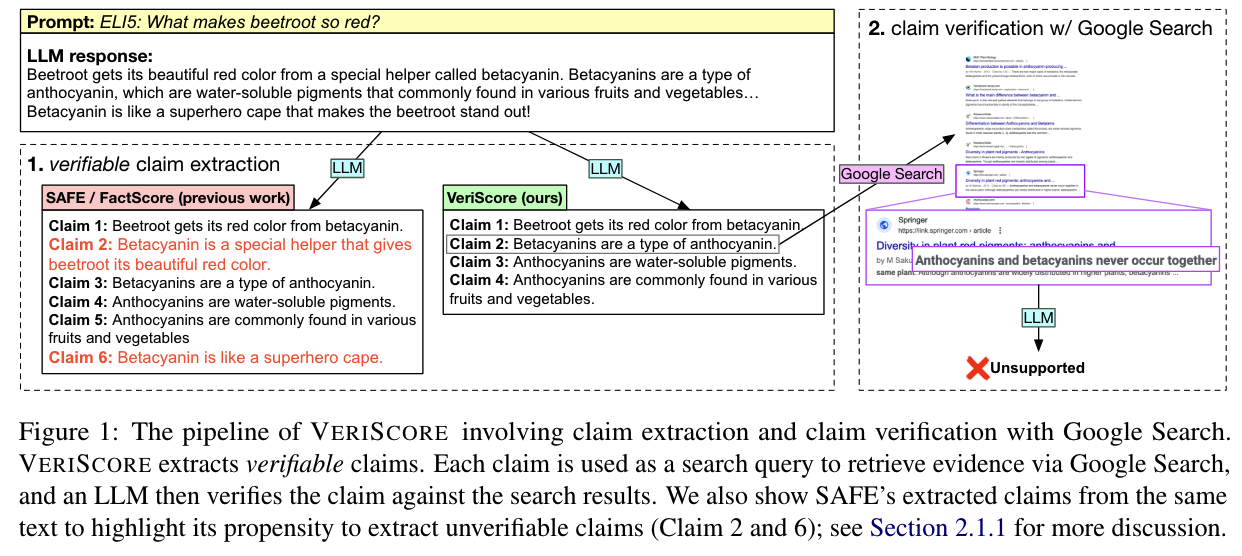

Yekyung Kim, Yapei Chang, Marzena Karpinska, Aparna Garimella, Varun Manjunatha, Kyle Lo, Tanya Goyal, Mohit Iyyer. COLM 2024. Dataset + Code


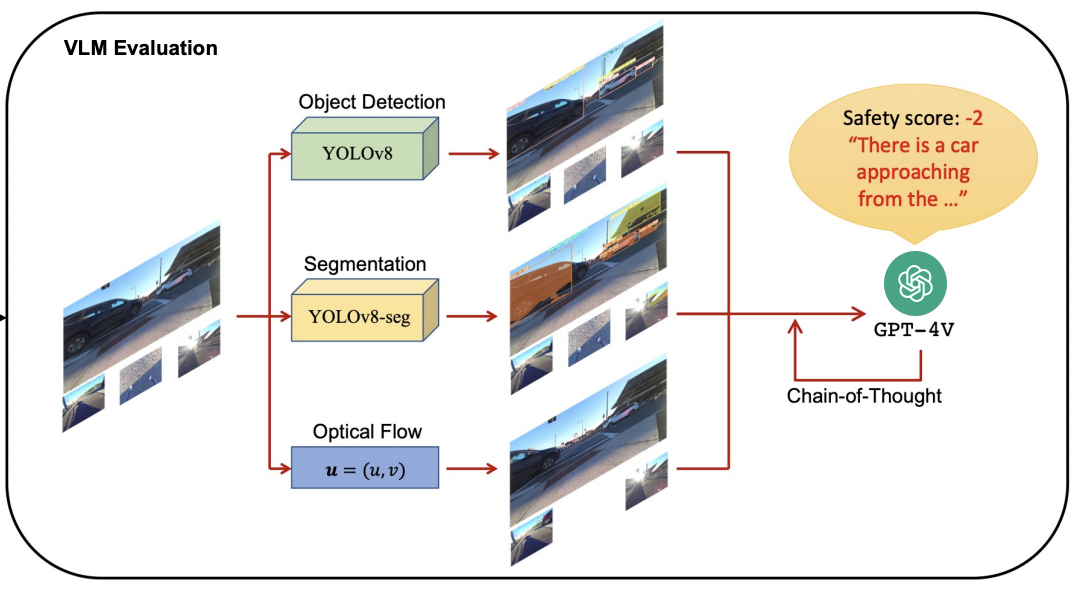

Hochul Hwang, Sunjae Kwon, Yekyung Kim, Donghyun Kim. 21st International Conference on Ubiquitous Robots.


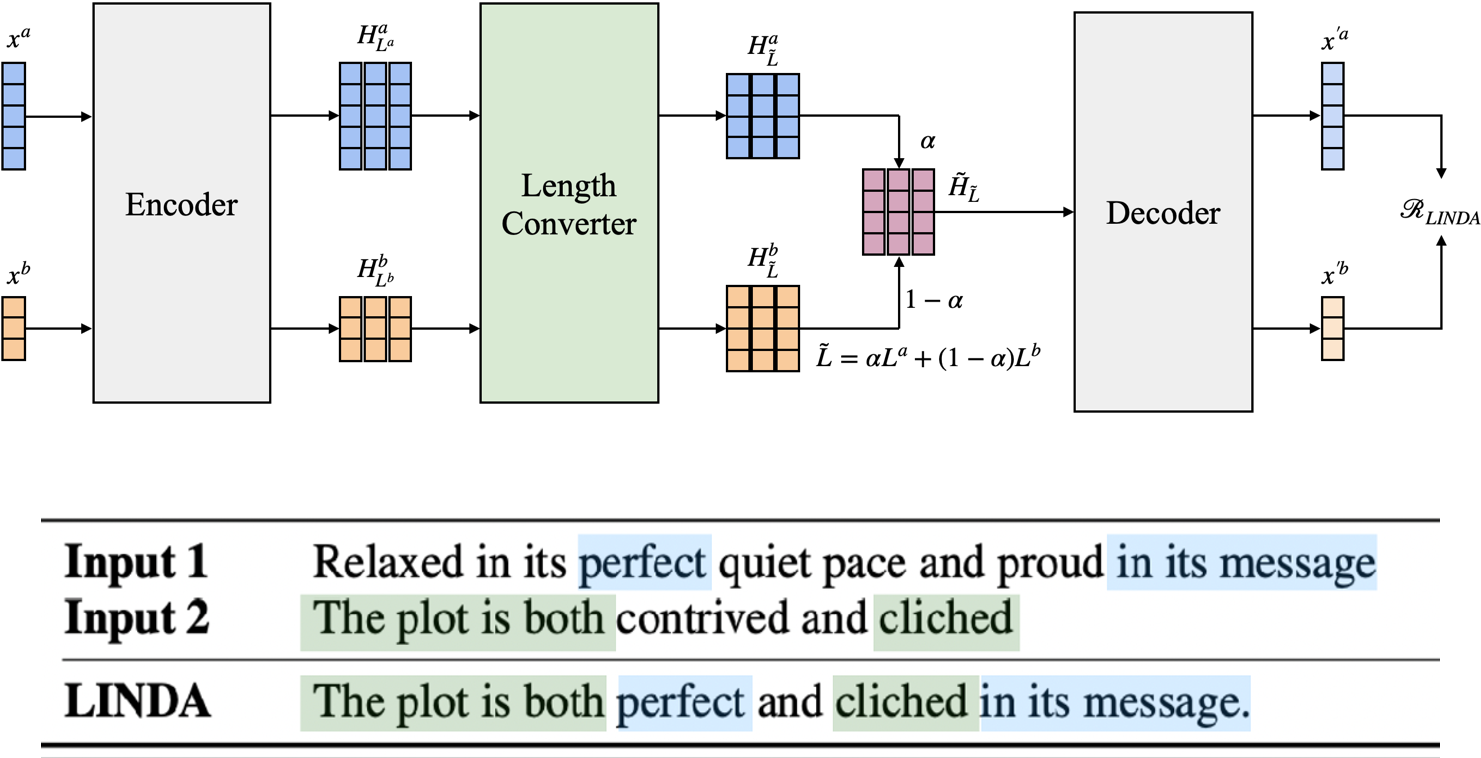

Yekyung Kim, Seohyeong Jeong, Kyunghyun Cho. arXiv.


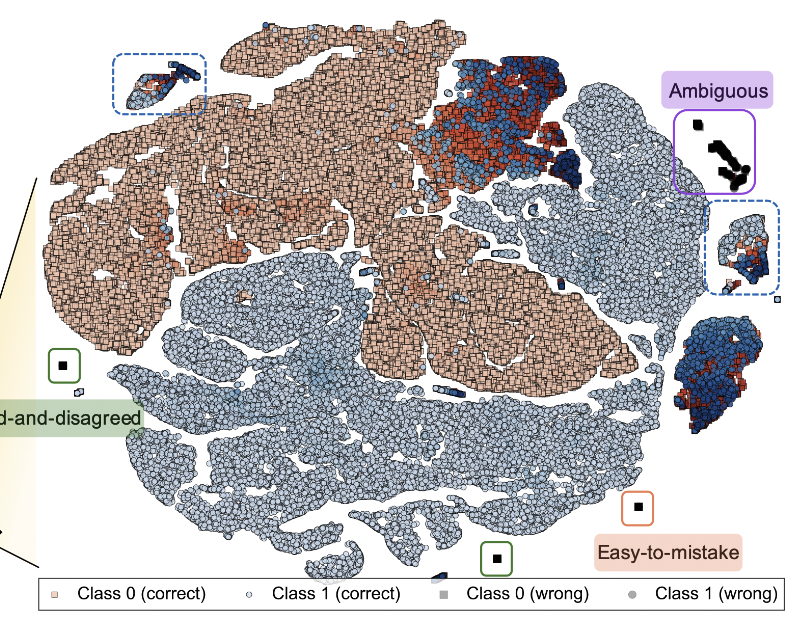

Jaehyung Kim, Yekyung Kim, Karin Johanna Denton de Langis, Jinwoo Shin, Dongyeop Kang. ACL 2023. Code


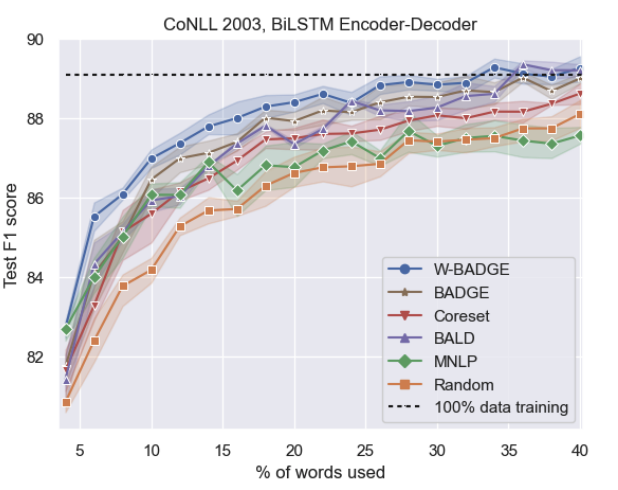

Ryan Koo, Yekyung Kim, Dongyeop Kang, Jaehyung Kim. AAAI 2024 Student Abstract and Poster Program.


Yekyung Kim. Workshop on Life-long Learning for Spoken Language Systems at AACL, 2021.


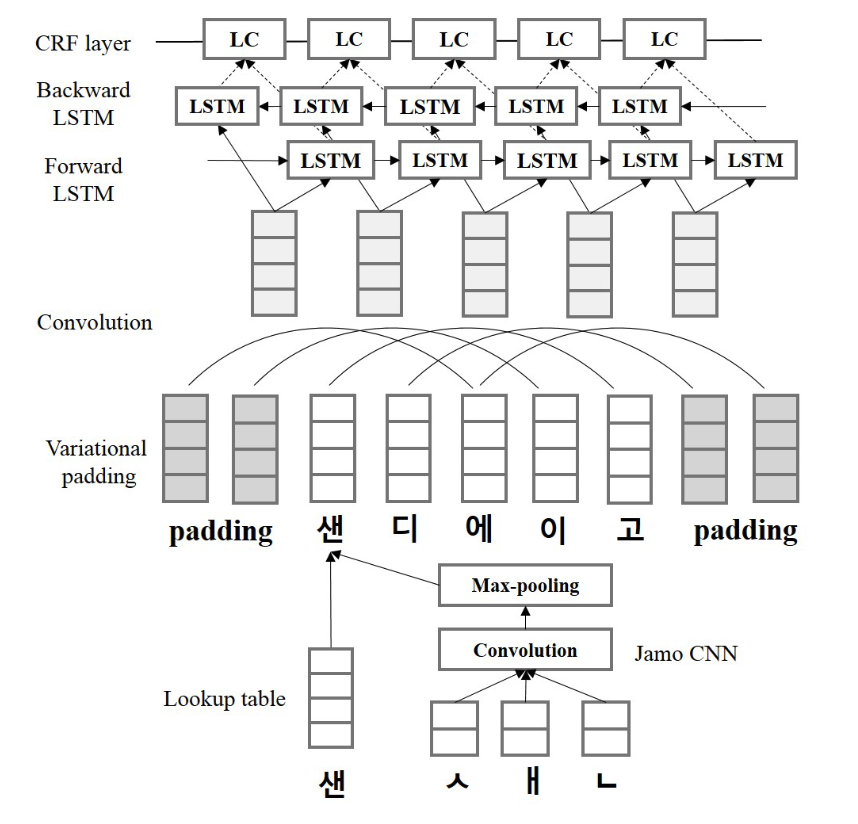

Yejin Kim, Yekyung Kim (equal contribution). The International FLAIRS Conference Proceedings, 2020.


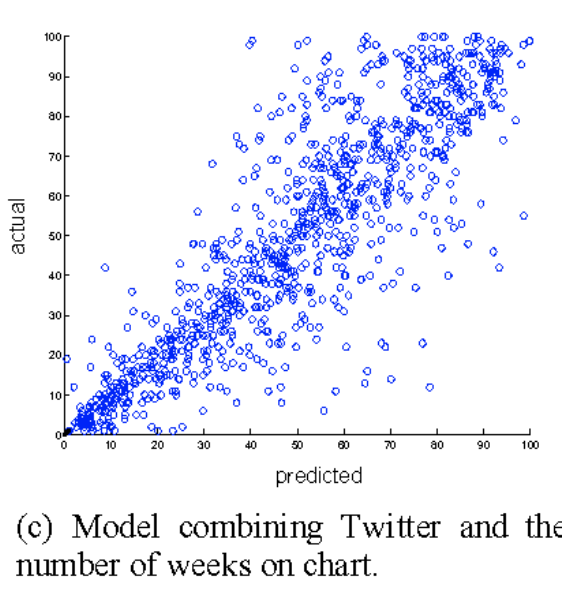

Yekyung Kim, Bongwon Suh, Kyogu Lee. Workshop on Social Media Retrieval and Analysis (SoMeRA) at SIGIR, 2014.


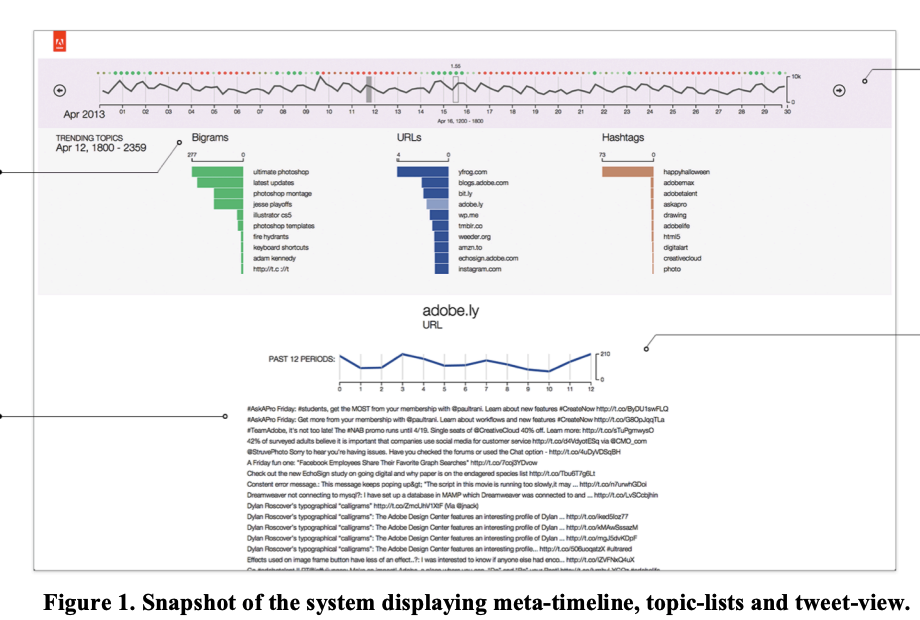

Ramik Sadana, Yekyung Kim, Bongwon Suh, Eunyee Koh. Industry Day at SIGIR, 2014.


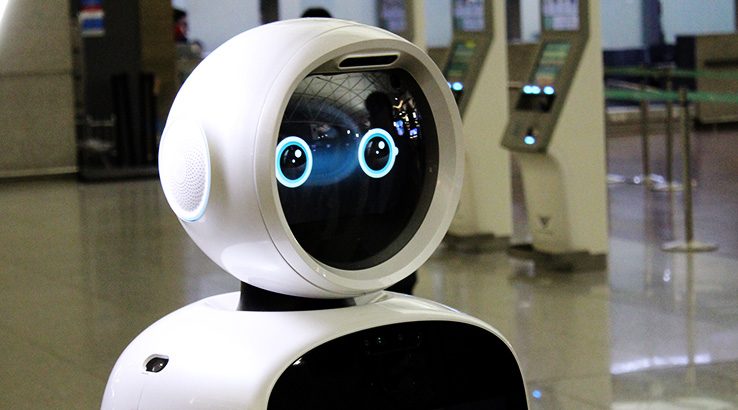

Airstar: Incheon Airport Robot, LG Electronics


In-car AI assistant, Hyundai


Chatbot for home appliances, LG Electronics